Innovation to transform your business

Discover the latest tech trends, tips, and industry insights.

Customize your search.

Use filters to navigate our case studies. Find solutions matching your industry, preferred technology, or project type.

Software Development

Explore the key benefits of using version control and release mangement in software development, emphasizing its role in preventing data loss, enhancing collaboration, and improving product quality. See the different types of version control systems and find advice on implementing them effectively. Learn how you can use it for streamlined and successful software projects.

29 Mar 2024

Technology

All Categories

Digital Transformation

Financial technology, or FinTech, is revolutionizing the way we manage, invest, and spend money, making transactions smoother, faster, and more secure. From mobile payments to blockchain technology fintech is bringing financial services into the digital age and making life easier for businesses and people around the world.

26 Mar 2024

Digital Transformation

Technology

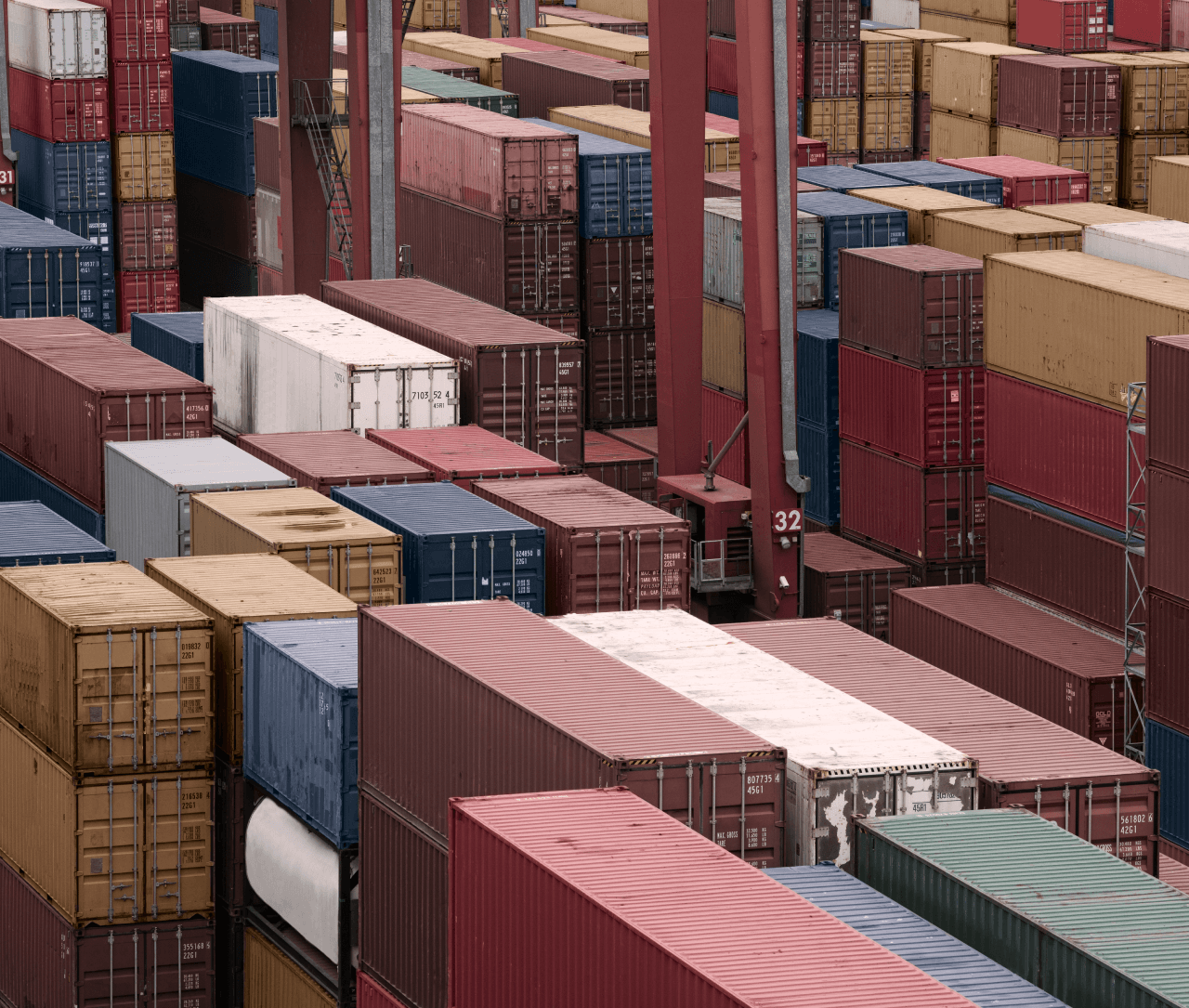

Companies involved in the movement of goods and the management of logistics are undergoing major changes to keep up with the demands of today's customers and the rapid pace of change in the marketplace. What they're doing is making extensive use of digital technologies - that is, using computers, the Internet and other digital tools not just to improve the way they work, but to fundamentally change the way they operate, interact with customers and run their businesses.

18 Mar 2024

Technology

IT Consulting

Digital Transformation

Retail in 2024 is set for a technological leap, driven by the industry's need to respond to growing consumer demands. As market saturation, rising consumer expectations, globalisation, cost reduction, sustainability and regulatory compliance pressures increase, the need for retailers to become more technologically advanced has become even more apparent.

01 Mar 2024

Technology

News

2023 was a year of major technological advances around the world that not only offered hope for a better future but began to make it a reality. Technology has advanced significantly in areas such as AI, quantum computing, AR/VR and environmental technology.

27 Feb 2024

Events

News

Digital Transformation

In today’s rapidly evolving business landscape, #digitaltransformation goes beyond the mere implementation of technology. It represents a fundamental change in the way organizations operate, deliver value, and interact with their stakeholders. This transformation isn’t just about adopting new technologies; it’s about fostering a culture that embraces change, overcomes legacy standards, and is adaptable to change and error.

12 Sep 2023

Software Development

Agile Project Management

Given the dynamic changes in the digital communications landscape, an Eastern European Postal Service recognised the need to modernise and adapt its operations. Aware of our proven track record, they approached us to lead their digital transformation efforts.

29 Aug 2023

IT Consulting

Software Development

Agile Project Management

The digital age is an exciting time for businesses, as new opportunities abound and technology advances quickly. However, it also poses the age-old question: how do we stay ahead of the competition while navigating this ever-evolving digital landscape? At EBS Integrator, we’ve found the answer to this conundrum lies in optimizing work processes and delivering top-notch IT solutions and products. In this article, we’ll reflect on the importance of these processes and explore how partnering with EBS Integrator can benefit your business.

11 May 2023

DevOps

Data engineering

We’re talking about data privacy in all its glory – why it matters to you and your customers, and where it’s all heading in the future. Because this is a part of a series, we’ll try and focus mostly on the aspects that affect us in the eCommerce domain! Let’s discuss why there’s so much hubbub around the topic of data privacy. This is not to say this discussion is anything new for the world, it’s been brought up countless of times, and the issue of “government spying” on you is as old as the idea of structured government is.

18 Feb 2022

Data engineering

DevOps

Technology

Let’s look at the new fad that has its roots all the way back in 1991, and how it is affecting the future of Digital Transformation (DX)! Blockchain and all that makes it work, is it a fad that we’ll forget in a couple of years or is it really the bright future that some people will swear it is!

06 Dec 2021

Artificial Intelligence

Quality assurance

Today we’re discussing the merits of Chatbots and Artificial Intelligence (AI) when it comes to providing optimal customer service! We’ll talk about the history of the first chatbots, why they are important for your enterprise and many other questions! For that purpose, we’ve asked one of our colleagues to give us his opinion!

22 Nov 2021

UI/UX Design

Business Analysis

Customer pain points, everybody even tangentially related to sales talks about them. Heck it’s one of the corner stones you must uncover if you want to successfully automate your business! But what are they? How do you see one coming and how do you deal with it?

07 May 2021

Programming Languages

Let’s take a deep dive in time and look at how computer science & programming languages began. Follow us as we go through the ages and look at the timeline, major personalities, and events that through their ingenuity paved the road to our current Technological and Digital Era.

03 Apr 2021

Programming Languages

Software Development

The world of Data Sciences’ is an ever-changing place, new applications and requirements appear on a daily basis. With all that, a professional SQL-guru who can optimize their interactions with databases is valued in his weight in gold. Luckily for us we have just such master. In this post we hope to explore the world of ORMs (particularely Django ORM vs SQL Alchemy) with our Python specialist and get his opinion on which he prefers! And provide some nifty examples to boot.

08 Mar 2021

Technology

In this article, Alex shares his journey through the implementation of a Clean Desk and Clean Screen Policy during his company's ISO 27001 certification process. Initially resistant to the changes, Alex reflects on the impact of the policy on his workflow, physical security habits, and overall job experience. The transition, while initially perceived as a challenge, ultimately led to a cultural shift within the company, emphasizing the importance of information security and organizational efficiency.

21 Jan 2021

Data engineering

Data Analytics

Peeling this BASICs digital onion, we’ve left some details out (particularly in our last article). As stated, data-centric and data-driven applications deserve their series, a journey we’re kicking off today. Before talking about BigData, strategies and mechanics, let’s filter the messy data-centric vs data-driven dilemma.

24 Aug 2020

Cloud engineering

DevOps

In a previous post, one of my colleagues elaborated quite well on native mobile apps vs hybrid/cross-platform ones. We also promised a follow-up and we felt an iOS team lead could deliver a better outlook on hybrid and cross-platform distinctions. Indeed, since hybrids and cross-platform builds come to aid business flows that require a different approach to mobile development, they are frequently confused as similar methods – which is accurate when comparing them to native builds. However, when compared between themselves, there is a lot to elaborate on – hence, the follow-up requirement. Without further due, let’s dig in and see what are the main differences between a hybrid build and a cross-platform one.

02 Jul 2019

Data Analytics

Data engineering

Technology

2018 is becoming quite promising for augmented reality (AR) and this goes way beyond Pokemon Go. AR is changing the world of consumer marketing. It empowers stakeholders and clients to experience products and services as “prescribed”. It acts as “a sibling” to Virtual Reality platforms aiding physical reality with virtual objects. This way AR is delivering a new, interactive customer experience. Unlike other hypes we’ve seen rising, this tech is here to stay with figures expected to reach $117.4 billion by 2022.

12 Feb 2018